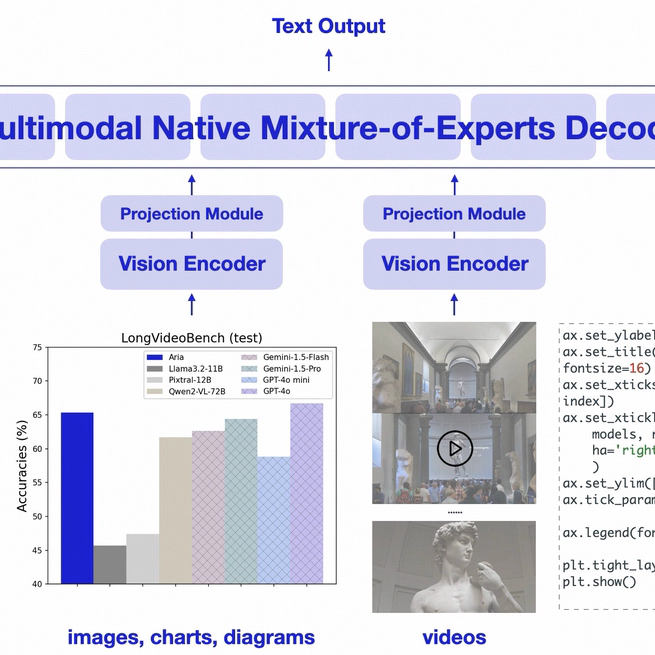

Aria (1000+ ⭐)

Aria is a multimodal native MoE model. It features:1️⃣State-of-the-art performance on various multimodal and language tasks, superior in video and document understanding; 2️⃣Long multimodal context window of 64K tokens; 3️⃣3.9B activated parameters per token, enabling fast inference speed and low fine-tuning cost.

Oct 10, 2024

MPP-Qwen-Next (400+ ⭐)

The Repo supports {video/image/multi-image} {single/multi-turn} conversations. All 7B/14B llava-like training is conducted on 3090/4090 GPUs. To prevent poverty (24GB of VRAM) from limiting imagination, I implemented an MLLM version based on deepspeed Pipeline Parallel.

Dec 25, 2023